AiPrise

January 16, 2026

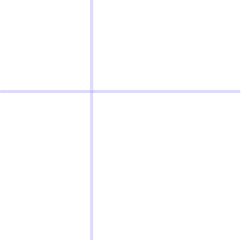

Understanding Liveness Detection: Deepfake Defense Strategies

The moment a face appears on your screen, how certain are you that it belongs to a real person? That question now sits at the centre of digital trust.

A Medius study found that over half of finance professionals in the U.S. and U.K. have been targets of a deepfake-powered financial scam, with 43% falling victim to such attacks. The cryptocurrency sector has been particularly affected, with deepfake-related incidents increasing by 654% from 2023 to 2024.

For businesses, understanding liveness detection is critical: weak defences can lead to identity fraud, financial loss, and regulatory penalties.

This blog explains how liveness checks work, how deepfake attacks bypass weak controls, and what effective defence strategies look like in practice. If protecting customers, revenue, and compliance matters to your organisation, this is where that understanding begins.

Key Takeaways

- Liveness detection confirms real human presence, blocking deepfakes and synthetic identities.

- Combining liveness with document checks, behavioural biometrics, and risk scoring strengthens overall fraud defence.

- AiPrise uses real-time liveness and Fraud & Risk Scoring to detect fraud before it impacts business.

- Passive and hybrid liveness approaches reduce user friction while maintaining security.

- Continuous model updates keep verification effective against evolving AI-driven attacks.

What Are Deepfakes and Why Do They Matter?

Deepfakes are synthetic images, videos, or audio created using advanced artificial intelligence models that can convincingly replicate a real person’s face, voice, or expressions. Unlike traditional spoofing methods, deepfakes are dynamic, adaptive, and increasingly difficult to distinguish from genuine human presence.

Here’s what makes modern deepfakes especially dangerous:

- AI-generated realism: Deep learning models can recreate natural eye movement, facial micro-expressions, and voice tone.

- Low barrier to creation: High-quality deepfake tools are now widely available and inexpensive.

- Scalability: One stolen identity can be replicated and reused across multiple platforms.

Why Deepfakes Hurt Your Business

Deepfakes erode digital trust at the exact moments where certainty matters most. From onboarding and video KYC to account recovery and high-value transactions, they enable attackers to present highly convincing but entirely fabricated identities.

For businesses, the impact is both operational and strategic:

- Customer onboarding risks: Fraudsters use deepfakes to bypass identity checks, allowing synthetic or stolen identities to enter core systems undetected.

- Financial exposure: Deepfake-enabled impersonation increases the risk of account takeovers, unauthorised transactions, and fraudulent payouts.

- Compliance and regulatory pressure: Failed identity verification can result in non-compliance with KYC and AML requirements, leading to audits, penalties, and remediation costs.

- Erosion of brand trust: When customers lose confidence in a platform’s ability to protect their identity, trust declines quickly and is difficult to rebuild.

If deepfakes exploit the gap between what looks real and what actually is, liveness detection is designed to close that gap.

What is Liveness Detection?

Liveness detection, also known as Presentation Attack Detection (PAD), is a biometric security mechanism used to verify that a real, live human being is present during an identity check, rather than a photo, video replay, mask, or AI-generated deepfake. Its purpose is not to identify who the person is, but to confirm that the person is genuinely there in real time.

At a technical level, liveness detection analyses subtle signals that are difficult for synthetic media to reproduce consistently.

Liveness detection typically evaluates:

- Physiological cues: Natural eye movement, blinking patterns, and micro-expressions that occur involuntarily in live humans.

- Depth and spatial signals: 3D facial structure and distance between facial features, helping distinguish real faces from flat or digitally injected media.

- Texture and light response: How skin reflects light, including variations caused by blood flow and surface texture.

- Behavioural consistency: Natural head movement, posture changes, and response timing.

Liveness detection operates as a protective layer within broader biometric and KYC workflows:

- Prevents spoofing attempts before face matching or document checks occur.

- Reduces false approvals caused by high-quality deepfake videos.

- Strengthens compliance with biometric security and anti-spoofing standards.

Liveness detection sounds simple, but its different types offer very different levels of security and user experience. Pick the wrong one, and fraud slips through.

Types of Liveness Detection

Liveness detection methods are generally classified based on how they confirm real human presence and how much user interaction they require. Understanding these differences is critical when selecting a defence strategy that balances security, accuracy, and user experience.

1. Active liveness detection

Active liveness detection verifies human presence by asking users to perform specific actions in real time. These actions may include blinking, smiling, turning the head, or following on-screen movement cues.

The system then evaluates whether the response aligns with natural human behaviour, expected reaction timing, and physical movement patterns. The underlying assumption is that pre-recorded or static media will fail to respond accurately to these dynamic prompts.

Strengths

- Effective at stopping basic spoofing attempts, such as printed photos or static images.

- Provides visible confirmation of real-time user participation during verification.

Limitations

- Increasingly vulnerable to advanced deepfakes, generated by models that can reproduce prompted actions in real time.

- Introduces user friction, which can slow onboarding and increase abandonment rates, particularly in mobile-first or high-volume environments.
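
The challenge-response idea behind active liveness can be sketched in a few lines. This is a minimal illustration, not a production design: the prompt names, the timing window, and the `Challenge`, `issue_challenge`, and `verify_response` helpers are all hypothetical, and the step that recognises which action the user performed is assumed to exist elsewhere.

```python
import random
from dataclasses import dataclass

# Hypothetical prompt set and timing window; real systems tune these empirically.
PROMPTS = ("blink", "smile", "turn_left", "turn_right")
MIN_REACTION_S = 0.15  # assumed lower bound: faster responses are implausible for a human
MAX_REACTION_S = 4.0   # assumed upper bound: slower responses are treated as a timeout


@dataclass
class Challenge:
    action: str
    issued_at: float  # timestamp in seconds


def issue_challenge(rng: random.Random, now: float) -> Challenge:
    """Pick an unpredictable action so a pre-recorded clip cannot anticipate it."""
    return Challenge(action=rng.choice(PROMPTS), issued_at=now)


def verify_response(challenge: Challenge, observed_action: str, observed_at: float) -> bool:
    """Accept only the requested action, performed within the plausible time window."""
    if observed_action != challenge.action:
        return False
    reaction = observed_at - challenge.issued_at
    return MIN_REACTION_S <= reaction <= MAX_REACTION_S
```

Randomising the prompt is what defeats static and pre-recorded media. It does not help against a deepfake that can react to the prompt live, which is the limitation noted above.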

2. Passive liveness detection

Passive liveness detection verifies real human presence without requiring any deliberate action from the user. Instead, it operates in the background, analysing visual and behavioural signals captured during a normal selfie or video interaction.

These signals include subtle facial micro-movements, skin texture variations, depth cues, light reflection patterns, and frame-level inconsistencies that are difficult for synthetic media to reproduce consistently. Machine learning models assess these signals in real time to determine whether the input originates from a live person or from a spoofing attempt such as a deepfake or injected video feed.

Strengths

- Highly effective against advanced video replays and AI-generated deepfakes.

- Creates a smooth, low-friction user experience with faster completion rates.

Limitations

- Requires sophisticated AI models and high-quality training data to maintain accuracy.

- May be harder for users to understand or trust if the process is not clearly explained.

3. Hybrid liveness detection

Hybrid liveness detection combines passive analysis with active challenges to strengthen overall decision accuracy. In this approach, passive liveness runs continuously in the background, evaluating risk signals throughout the verification session. Active prompts are introduced only when suspicious behaviour or elevated risk is detected. This adaptive model allows systems to respond dynamically to potential threats rather than applying the same level of scrutiny to every user.

Strengths

- Balances strong security with minimal user friction.

- Adapts to risk in real time, making it more resilient against evolving deepfake techniques.

Limitations

- More complex to implement and integrate into existing verification workflows.

- Requires careful tuning to avoid unnecessary challenges for legitimate users.
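
The adaptive flow described for hybrid liveness can be illustrated as follows. This is a sketch under stated assumptions: the passive score is taken to be a number between 0 and 1, both thresholds are invented for the example, and `run_active_challenge` is a hypothetical callback that presents a prompt and reports whether the user passed it.

```python
from typing import Callable

# Hypothetical thresholds on a passive liveness score in [0, 1].
PASS_THRESHOLD = 0.90
FAIL_THRESHOLD = 0.40


def verify_session(passive_score: float, run_active_challenge: Callable[[], bool]) -> str:
    """Return "pass" or "fail", prompting the user only in the uncertain middle band."""
    if passive_score >= PASS_THRESHOLD:
        return "pass"  # confident enough: no extra friction
    if passive_score < FAIL_THRESHOLD:
        return "fail"  # clearly suspicious: reject without prompting
    # Grey zone: step up to an active challenge and let its result decide.
    return "pass" if run_active_challenge() else "fail"
```

The gap between the two thresholds controls how many legitimate users see a prompt, which is where the tuning effort mentioned above goes.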

Understanding the types of liveness detection is only part of the picture; what truly determines effectiveness is the technology operating beneath the surface.

How Liveness Detection Works: The Technology Stack

Liveness detection relies on a layered technology stack that evaluates multiple signals simultaneously to distinguish a real human from a spoof or deepfake. Rather than depending on a single indicator, modern systems combine computer vision, biometric science, and AI decisioning to reduce false approvals.

Here’s a complete breakdown of how the liveness detection system works:

1. Image and video capture layer

The liveness detection process begins at the point of capture, usually through a device camera during a selfie or video session. At this stage, the system evaluates whether the incoming feed is suitable for reliable analysis and whether it shows signs of manipulation.

Here, the system looks for:

- Frame quality, motion stability, and lighting consistency.

- Early indicators of replay attacks or injected video feeds.
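
A capture-quality gate of this kind might look like the sketch below. It assumes frames arrive as grayscale pixel grids (lists of rows with values from 0 to 255), and the brightness and flicker limits are illustrative values rather than recommended ones. It covers only the lighting checks, not replay or injection detection.

```python
from statistics import mean, pstdev

Frame = list[list[int]]  # grayscale pixel rows, values 0-255 (assumed input format)


def frame_brightness(frame: Frame) -> float:
    """Average pixel intensity of one frame."""
    return mean(p for row in frame for p in row)


def capture_is_usable(
    frames: list[Frame],
    min_brightness: float = 40.0,   # hypothetical: darker frames are rejected
    max_brightness: float = 220.0,  # hypothetical: brighter frames are rejected as overexposed
    max_flicker: float = 25.0,      # hypothetical: allowed spread of brightness across frames
) -> bool:
    """Check that every frame is reasonably lit and that lighting is stable over time."""
    levels = [frame_brightness(f) for f in frames]
    if not levels:
        return False
    if not all(min_brightness <= b <= max_brightness for b in levels):
        return False
    return pstdev(levels) <= max_flicker
```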

2. Facial feature and depth analysis

Once the image or video is accepted, the system examines the spatial structure of the face to confirm physical presence. This analysis focuses on whether the face exhibits realistic three-dimensional characteristics rather than the flat or distorted appearance common in spoofing attempts.

Specifically, the system evaluates:

- 3D depth cues and facial geometry.

- Natural proportions and alignment of facial features.

- Consistency of depth information across multiple frames.
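
Where a device exposes depth data, the flat-versus-3D check can be sketched roughly as follows. This assumes a per-frame depth map in millimetres already cropped to the face region, which many cameras cannot provide; the thresholds and function names are hypothetical.

```python
from statistics import mean, pstdev

DepthMap = list[list[float]]  # depth in millimetres over the face region (assumed input)


def depth_relief(depth_map: DepthMap) -> float:
    """Difference between the farthest and nearest point on the face."""
    values = [d for row in depth_map for d in row]
    return max(values) - min(values)


def looks_three_dimensional(
    depth_maps: list[DepthMap],
    min_relief_mm: float = 15.0,         # hypothetical: a printed photo or screen is nearly flat
    max_relief_jitter_mm: float = 10.0,  # hypothetical: relief should stay stable across frames
) -> bool:
    """Require real facial relief that is also consistent from frame to frame."""
    reliefs = [depth_relief(m) for m in depth_maps]
    if not reliefs:
        return False
    return mean(reliefs) >= min_relief_mm and pstdev(reliefs) <= max_relief_jitter_mm
```

Systems without a depth sensor typically have to infer similar cues from ordinary video, which this sketch does not attempt.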

3. Texture and light reflection analysis

At this layer, the system assesses how light interacts with the surface of the face at a detailed level. Real human skin reflects light in complex, uneven ways that are difficult for deepfake models to reproduce accurately over time.

The analysis focuses on:

- Skin texture details such as pores and surface irregularities.

- Subtle changes in light reflection as the head moves.

- Visual artefacts like over-smoothing or unnatural shading.
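
One simple way to illustrate the over-smoothing check is the variance of a Laplacian filter, a common sharpness measure: regions with pores and fine texture produce varied responses, while over-smoothed regions produce almost none. The sketch below is a stand-in for whatever texture model a real system uses. It expects a grayscale grid of at least 3 by 3 pixels, and the threshold is an arbitrary example value.

```python
from statistics import pvariance


def laplacian_variance(gray: list[list[int]]) -> float:
    """Variance of the 4-neighbour Laplacian over interior pixels (grid must be >= 3x3)."""
    height, width = len(gray), len(gray[0])
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            lap = (
                gray[y - 1][x] + gray[y + 1][x]
                + gray[y][x - 1] + gray[y][x + 1]
                - 4 * gray[y][x]
            )
            responses.append(lap)
    return pvariance(responses)


def looks_oversmoothed(gray: list[list[int]], min_variance: float = 50.0) -> bool:
    """Flag a face crop whose fine detail is suspiciously low (threshold is hypothetical)."""
    return laplacian_variance(gray) < min_variance
```

A low score can also come from motion blur or a poor camera, so in practice this is one weak signal among several rather than a verdict on its own.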

4. Micro-movement and behavioural signals

Live humans display continuous, involuntary movements that are difficult to simulate precisely. This layer examines behavioural patterns to confirm natural motion coherence throughout the verification session.

The system observes:

- Blinking cadence and eye focus shifts.

- Minor muscle movements and posture adjustments.

- Timing alignment between movement and camera capture.
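
Blinking cadence is often measured from an eye aspect ratio (EAR), a per-frame number that drops when the eye closes. The sketch below assumes an upstream landmark detector has already produced the EAR series; the closed-eye threshold and the acceptable blink-rate range are illustrative assumptions, not clinical values.

```python
def count_blinks(
    ear_series: list[float],
    closed_threshold: float = 0.21,  # hypothetical: EAR below this counts as "eye closed"
    min_closed_frames: int = 2,      # ignore single-frame dips, which are usually noise
) -> int:
    """Count blinks; a blink is counted when the eye reopens after a closed run."""
    blinks = 0
    closed_run = 0
    for ear in ear_series:
        if ear < closed_threshold:
            closed_run += 1
        else:
            if closed_run >= min_closed_frames:
                blinks += 1
            closed_run = 0
    return blinks


def blink_rate_plausible(
    ear_series: list[float],
    fps: float,
    min_per_min: float = 4.0,   # hypothetical lower bound
    max_per_min: float = 40.0,  # hypothetical upper bound
) -> bool:
    """Check that blinks per minute fall inside an assumed human-plausible range."""
    duration_min = len(ear_series) / fps / 60.0
    if duration_min == 0:
        return False
    rate = count_blinks(ear_series) / duration_min
    return min_per_min <= rate <= max_per_min
```

In a session of only a few seconds a real person may not blink at all, so a check like this is better treated as supporting evidence than as a hard gate.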

5. AI classification and risk scoring

In the final stage, all extracted signals are combined and evaluated using machine learning models trained on both legitimate interactions and known attack patterns. Rather than relying on a single indicator, the system weighs multiple signals to form a holistic assessment of liveness.

The models:

- Assign a confidence score representing the likelihood of real human presence.

- Apply risk thresholds to determine pass, fail, or step-up actions.

- Continuously evolve through retraining to keep pace with emerging deepfake techniques.
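
To make the signal-weighting step concrete, here is a minimal sketch that fuses per-layer scores into a single confidence value with a logistic function. The hand-set weights and bias are placeholders standing in for a trained model, and the signal names are hypothetical labels for the layers described above.

```python
import math

# Hypothetical weights; in a real system these would be learned from labelled data.
WEIGHTS = {"depth": 2.0, "texture": 1.5, "motion": 1.5, "capture_integrity": 3.0}
BIAS = -4.0


def liveness_confidence(signals: dict[str, float]) -> float:
    """Combine per-layer scores in [0, 1] into one confidence value in (0, 1)."""
    z = BIAS + sum(weight * signals[name] for name, weight in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))
```

With these example numbers a session that scores 1.0 on every layer lands near the top of the range and one that scores 0.0 everywhere lands near the bottom. Mapping the result to pass, fail, or step-up is then a matter of choosing thresholds that fit the business's risk tolerance.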

Also read: How to Detect Financial Services Fraud: A Practical Guide for Businesses

What makes liveness detection effective is not that it matches the sophistication of deepfakes, but that it exposes the signals those deepfakes cannot fake.

How Liveness Detection Counters Common Deepfake Tricks

Deepfake attacks succeed by imitating visual appearance. Liveness detection defeats them by analysing real-time human presence, where synthetic media tends to fall short. Instead of relying on surface-level resemblance, liveness systems examine behaviour, physical response, and environmental coherence across the entire interaction.

Here are some of the most common deepfake attack techniques, and how liveness stops them:

Static Images and Printed Photos

These attacks present a single, flat visual to the camera. Liveness detection quickly identifies the absence of facial depth, natural light interaction, and involuntary movement, rejecting the attempt at the earliest stage of verification.

Video Replay Attacks

Pre-recorded videos may show blinking or head movement, but they lack true real-time interaction. Liveness detection uncovers replay attempts by analysing response latency, frame repetition patterns, and inconsistencies in motion continuity.
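
Frame repetition is the easiest of these signals to illustrate. The sketch below hashes raw frame bytes and reports how many frames are exact repeats of earlier ones. It is a deliberately crude illustration: it assumes access to raw frame buffers, it only catches looped or injected feeds that repeat byte for byte, and a production system would more likely use a similarity measure that tolerates re-encoding.

```python
import hashlib


def repeated_frame_ratio(frames: list[bytes]) -> float:
    """Fraction of frames whose exact content already appeared earlier in the session."""
    seen: set[bytes] = set()
    repeats = 0
    for frame in frames:
        digest = hashlib.sha256(frame).digest()
        if digest in seen:
            repeats += 1
        else:
            seen.add(digest)
    return repeats / len(frames) if frames else 0.0
```

Live camera output carries sensor noise, so exact duplicates should be rare. A high ratio suggests a short clip being looped.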

AI-Generated Deepfake Videos

Advanced deepfakes can follow prompts and mimic expressions, but they struggle to maintain realistic micro-level signals. Liveness detection reveals subtle anomalies in skin texture, light reflection, depth consistency, and behavioural rhythm across frames.

Injected or Manipulated Camera Feeds

More sophisticated attacks bypass the camera entirely by injecting synthetic video directly into the verification flow. Liveness systems catch these attempts by validating capture integrity, sensor behaviour, and environmental signals that software-generated feeds struggle to replicate.

Also Read: Guide to Understanding Fraud Detection Rules

Liveness detection closes one of the most critical gaps in identity verification, but on its own, it is not designed to carry the full weight of modern fraud defence.

Multi-Layered Defence: Beyond Liveness Detection

While liveness detection is essential for stopping deepfakes at the point of entry, sophisticated fraud operations rely on multiple tactics across the user journey. A resilient security strategy, therefore, combines liveness with complementary controls that assess identity, behaviour, and context over time.

Deepfake attacks rarely occur in isolation. Once an attacker passes initial verification, they often attempt to manipulate accounts, abuse transactions, or repeatedly access the account from different devices. A multi-layered defence ensures that risk is continuously evaluated rather than assumed to be resolved after onboarding.

Key layers that strengthen liveness detection:

- Document and identity verification: Validates government-issued IDs and supporting documents, detecting tampering, forgery, and data inconsistencies before biometric checks are finalised.

- Behavioural biometrics: Monitors how users interact with devices over time, analysing patterns such as typing rhythm, navigation behaviour, and session dynamics to identify anomalies.

- Device and network intelligence: Assesses device fingerprints, IP reputation, geolocation changes, and network behaviour to identify high-risk access attempts or automated fraud activity.

- Risk-based authentication: Adjusts verification requirements dynamically, introducing step-up checks only when risk signals increase, rather than applying friction uniformly.

In practice, liveness detection works best as the first line of defence within a broader, adaptive security framework built to respond to evolving deepfake and fraud tactics.

Use AiPrise User Verification together with Fraud & Risk Scoring and liveness detection to confirm real human presence, validate identity documents, and assess risk signals in real time.

Liveness detection secures the human front door, but to truly safeguard your business, you also need intelligence that detects risk patterns beyond that first check.

Why AiPrise Stands Out as the Better Option

AiPrise is an AI-powered identity and compliance platform designed to help businesses verify users, prevent fraud, and manage risk with precision and scale. AiPrise’s Fraud & Risk Scoring offering specifically addresses sophisticated identity threats, including deepfakes, synthetic accounts, and bot-driven abuse, by analysing a wide range of data signals to score and mitigate risk at onboarding and beyond.

Fraud & Risk Scoring in AiPrise goes beyond simple authentication checks. It evaluates multiple facets of a user’s digital footprint and interaction context, providing a dynamic risk assessment that feeds into real-time decisions. Instead of treating each user as a binary “pass/fail”, this product calculates nuanced risk scores based on:

- Email and phone verification: Age of email accounts, phone activity data, and deliverability status help flag disposable or suspicious contact information.

- IP and device intelligence: Accurate location understanding and device fingerprinting identify anomalies such as VPN usage, bot traffic, or inconsistent device behaviour.

- Custom risk scoring rules: Businesses can tailor the scoring logic to their unique risk tolerance, applying their own thresholds and rule sets.

- Pre-built risk rules: A library of ready-to-use scenarios accelerates setup and covers common fraud patterns without heavy engineering effort.
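
As a generic illustration of rule-based risk scoring, and not a representation of AiPrise's actual API or rule format, the sketch below adds points for each rule a user record triggers. Every field name, rule name, and point value is hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    name: str
    points: int
    applies: Callable[[dict], bool]


# Hypothetical rules over a hypothetical user record.
RULES = [
    # An unknown email age defaults to 0 and is therefore treated as new.
    Rule("new_email", 20, lambda u: u.get("email_age_days", 0) < 30),
    Rule("vpn_detected", 25, lambda u: u.get("vpn", False)),
    Rule("undeliverable_phone", 30, lambda u: not u.get("phone_deliverable", True)),
]


def score_user(user: dict, rules: list[Rule] = RULES) -> tuple[int, list[str]]:
    """Return a risk score capped at 100 and the names of the rules that fired."""
    hits = [rule for rule in rules if rule.applies(user)]
    return min(100, sum(rule.points for rule in hits)), [rule.name for rule in hits]
```

Returning the names of the triggered rules alongside the score keeps the decision explainable, which matters when a reviewer or auditor asks why a user was flagged.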

If deepfakes and identity fraud are already on your risk radar, now is the time to move from awareness to prevention.

See how AiPrise’s Fraud & Risk Scoring works alongside liveness detection to identify real users, surface hidden risk, and stop sophisticated attacks before they escalate.

Visit the AiPrise website today and understand what smarter, real-time risk decisions look like for your business.

Summing Up

Deepfakes have fundamentally changed how digital identity fraud operates, making appearance-based verification unreliable on its own. Liveness detection addresses this shift by confirming real human presence, while layered controls and intelligent risk scoring ensure threats are identified throughout the user journey. Together, these defences help businesses protect trust, reduce fraud exposure, and meet evolving regulatory expectations without adding unnecessary friction.

If securing digital identities is a priority for your organisation, the next step is clarity. Explore how AiPrise combines liveness detection with real-time fraud and risk scoring to deliver stronger protection and smarter decisions. Book a Demo with AiPrise to see this approach in action.

FAQs

1. Can liveness detection work reliably on low-end or older devices?

Yes. Modern liveness systems are designed to adapt to varying camera quality and network conditions by focusing on behavioural and texture-based signals rather than hardware-dependent features alone.

2. How does liveness detection handle poor lighting or unstable environments?

Advanced systems assess environmental quality in real time and adjust confidence thresholds or trigger step-up checks when conditions could affect accuracy.

3. Does liveness detection store biometric data permanently?

Most enterprise-grade solutions process biometric data transiently and store only encrypted, policy-compliant outputs, aligning with data minimisation and privacy requirements.

4. How often should liveness detection models be updated?

Models should be updated continuously or at frequent intervals to stay effective against newly emerging deepfake techniques and generative AI patterns.

5. Can liveness detection be applied beyond onboarding use cases?

Yes. It is increasingly used for account recovery, high-risk transactions, and re-authentication to prevent impersonation after initial verification.
